Agentic AI takes autonomy to new levels

Generative AI has shown an impressive ability to interpret and create text, sounds and images. These capabilities are being incorporated into robotic and other embedded-control systems. The changes will make these systems easier for end-users to interact with. Robots, for example, can listen for natural-language requests. A generative AI model then turns those requests into code or instructions that the motion-controlling hardware embedded inside the machine can execute.

Such a use case for generative AI is primarily reactive. The user needs to make these requests, and the AI model will translate them. That implies a need for frequent user intervention. Any time a behaviour change is needed, a human operator needs to initiate it. Agentic AI takes a more active role, relieving users of the need to monitor and steer the system constantly.

A quick introduction to agentic AI

Agentic AI assembles generative AI components into a workflow that interacts with the world around it. The result is a system that acts autonomously based on observations of its environment, using AI models to plan activities with minimal human intervention. The key to agentic AI is the ability for a system to self-organise to meet high-level goals set by a user. Though this represents a major change in the way AI operates in a computer system, it is a natural evolution of generative AI.

Thanks to the growing use of chatbot and copilot applications, the large language model (LLM) has become the most widely used form of generative AI. Trained on large bodies of text, the LLM is built on the Transformer, a neural-network architecture introduced in 2017. During training, the Transformer's attention mechanism helps the model learn statistical relationships between all the words, or tokens, in the source text. When the model is deployed, it uses those relationships to infer connections between the tokens in a prompt and then to generate a coherent stream of output tokens.
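The relationship-weighting step at the heart of the Transformer is scaled dot-product attention. The sketch below implements it in plain Python for three toy token vectors; the vector values are illustrative assumptions rather than learned embeddings, and a real model adds learned projections, multiple attention heads and many stacked layers.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: List[Vector], keys: List[Vector],
              values: List[Vector]) -> Tuple[List[Vector], List[List[float]]]:
    """Scaled dot-product attention: each query mixes the value vectors,
    weighted by how strongly it matches each key."""
    d = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        weight_rows.append(weights)
    return outputs, weight_rows


# Three toy 2-D "token embeddings" (illustrative values, not learned ones).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
_, weights = attention(tokens, tokens, tokens)  # self-attention over the sequence
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token attends to every token in the sequence; tokens whose vectors point in similar directions receive larger weights.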


In multimodal models, the Transformer concept has also been applied successfully to images and audio, making it possible for the model to learn the connections between text, images and sound and to use them to generate contextually relevant responses to prompts.

Just a few years ago, users expected models to respond to each prompt individually. A new prompt might include some context from earlier interactions, but each interaction was still a single pass through the LLM. The chief way to improve the quality of results was to retrain on better data, possibly including the outputs from previous prompt-response cycles. However, research has shown that generative AI can produce better results when the models are made to create their own prompts in response to a user’s high-level request.

The LLM can use this strategy to decompose problems into more manageable elements. Known as chain-of-thought (CoT) prompting, this method involves feeding the model carefully worded prompts that tell it to break the initial task down into steps[1]. The result is often more accurate than the answer a better-trained model would give to the same prompt in a single pass[2]. Because accuracy improves by spending more computation at inference time rather than on further training, the technique is sometimes called inference-time scaling.
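A CoT prompt is mostly a matter of wording plus a convention for finding the final answer in the model's reply. The sketch below shows one possible template and answer parser; the instruction text and the `Answer:` convention are illustrative assumptions, and the "response" is a hand-written stand-in, since no model is called.

```python
from typing import Optional


def build_cot_prompt(task: str) -> str:
    """Wrap a user task in instructions asking for step-by-step reasoning.
    The wording is illustrative, not a prescribed template."""
    return (
        f"Task: {task}\n"
        "Break the task into numbered steps and work through each one in turn.\n"
        "Finish with a single line of the form 'Answer: <result>'."
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(response.strip().splitlines()):
        line = line.strip()
        if line.startswith("Answer:"):
            return line[len("Answer:"):].strip()
    return None


prompt = build_cot_prompt("A conveyor moves 3 parts per minute. How many parts in 2 hours?")
# Hand-written stand-in for what a model might return; no model is called here.
response = "1. 2 hours is 120 minutes.\n2. 3 x 120 = 360.\nAnswer: 360"
print(extract_answer(response))
```

Asking for a fixed final-answer line keeps the intermediate steps visible while still giving downstream software something it can parse reliably.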

| Types of AI | Capabilities |
|---|---|
| Generative AI | |
| Agentic AI | |
| AI Agents | |

Agentic AI takes advantage of CoT-like techniques to give generative AI models greater autonomy. The model takes on the role of an orchestrator that coordinates the work of other software-based functions in the system, some of which will be other, more specialised AI models[3].
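In software terms, an orchestrator needs at least a registry of the functions it can call and a way to execute a plan against that registry. The minimal sketch below assumes a plan expressed as (tool name, arguments) pairs; in an agentic system the orchestrating model would generate the plan, whereas here it is written by hand, and the tool names are hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Dict[str, Any]]  # (tool name, keyword arguments)


class Orchestrator:
    """Minimal tool registry plus dispatcher. In an agentic system the plan
    would come from the orchestrating model; here it is written by hand."""

    def __init__(self) -> None:
        self.tools: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self.tools[name] = fn

    def run(self, plan: List[Step]) -> List[Any]:
        results = []
        for name, kwargs in plan:
            if name not in self.tools:
                # A real orchestrator might feed this error back to the model to replan.
                raise KeyError(f"no tool registered under {name!r}")
            results.append(self.tools[name](**kwargs))
        return results


# Hypothetical "specialised functions" standing in for other models or services.
orch = Orchestrator()
orch.register("mean_temperature", lambda readings: sum(readings) / len(readings))
orch.register("over_limit", lambda readings, limit: [r for r in readings if r > limit])

plan = [
    ("mean_temperature", {"readings": [60.0, 65.0, 70.0]}),
    ("over_limit", {"readings": [60.0, 65.0, 70.0], "limit": 64.0}),
]
print(orch.run(plan))
```

Keeping tools behind named, typed entries makes it straightforward to validate a model-generated plan before anything is executed.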

The CoT-like approach lets the model iterate through prompts generated by earlier prompts. The model may even try different strategies, each with its own flow of CoT prompts, and compare their results to select the response it judges most appropriate, as shown in the left-hand table. By operating in a feedback loop, the orchestrator can continually fine-tune its behaviour as it generates new instructions for the downstream functions.
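One well-known rule for comparing several reasoning paths is majority voting over their final answers, often called self-consistency. The sketch below assumes the final answers have already been extracted from each path; the five canned answers are hypothetical stand-ins for sampled model runs.

```python
from collections import Counter
from typing import Callable, List, Tuple


def self_consistent_answer(sample_path: Callable[[int], str],
                           n_paths: int) -> Tuple[str, float]:
    """Run n_paths independent reasoning attempts and return the most common
    final answer together with the fraction of paths that agreed with it."""
    answers: List[str] = [sample_path(i) for i in range(n_paths)]
    best, votes = Counter(answers).most_common(1)[0]
    return best, votes / n_paths


# Hypothetical final answers from five CoT runs; a real system would obtain each
# by sampling the model at a non-zero temperature and parsing its last line.
canned = ["360", "360", "180", "360", "36"]
answer, agreement = self_consistent_answer(lambda i: canned[i], len(canned))
print(answer, agreement)
```

The agreement fraction can double as a rough confidence signal, for instance to decide when to call a verification tool or ask a human operator.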

Agentic AI can rely on external tools, such as symbolic mathematical proof engines and software verification tools, to check AI-model outputs for correctness. Such tools might reject a generated solution that is impossible to implement based on the problem’s constraints. The agent will, in response, generate new potential solutions until it finds one that is practical.
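The generate-and-check loop can be sketched as below. This is an illustration under simple assumptions: the "tool" is a hand-written constraint checker standing in for a proof engine or verifier, the candidate power plans are hard-coded in place of model outputs, and the limits are hypothetical.

```python
from typing import Callable, Iterable, Optional, Tuple, TypeVar

T = TypeVar("T")


def first_valid(candidates: Iterable[T], check: Callable[[T], Tuple[bool, str]],
                max_attempts: int) -> Optional[T]:
    """Try candidates in order until the checker accepts one or the budget runs out."""
    for attempt, candidate in enumerate(candidates):
        if attempt >= max_attempts:
            break
        ok, reason = check(candidate)
        if ok:
            return candidate
        # In an agent, `reason` would be fed back into the next generation prompt.
        print(f"rejected {candidate}: {reason}")
    return None


POWER_BUDGET_W = 100.0   # hypothetical shared supply limit
PER_AXIS_MAX_W = 50.0    # hypothetical per-actuator limit


def check_power_plan(plan: Tuple[float, ...]) -> Tuple[bool, str]:
    if any(p > PER_AXIS_MAX_W for p in plan):
        return False, "an actuator exceeds its limit"
    if sum(plan) > POWER_BUDGET_W:
        return False, "total exceeds the power budget"
    return True, "ok"


proposals = [(60.0, 20.0, 10.0), (40.0, 40.0, 30.0), (30.0, 30.0, 30.0)]
print(first_valid(proposals, check_power_plan, max_attempts=5))
```

The attempt budget matters in practice: without one, an agent facing an unsatisfiable problem could keep generating candidates indefinitely.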

Iterative autonomy

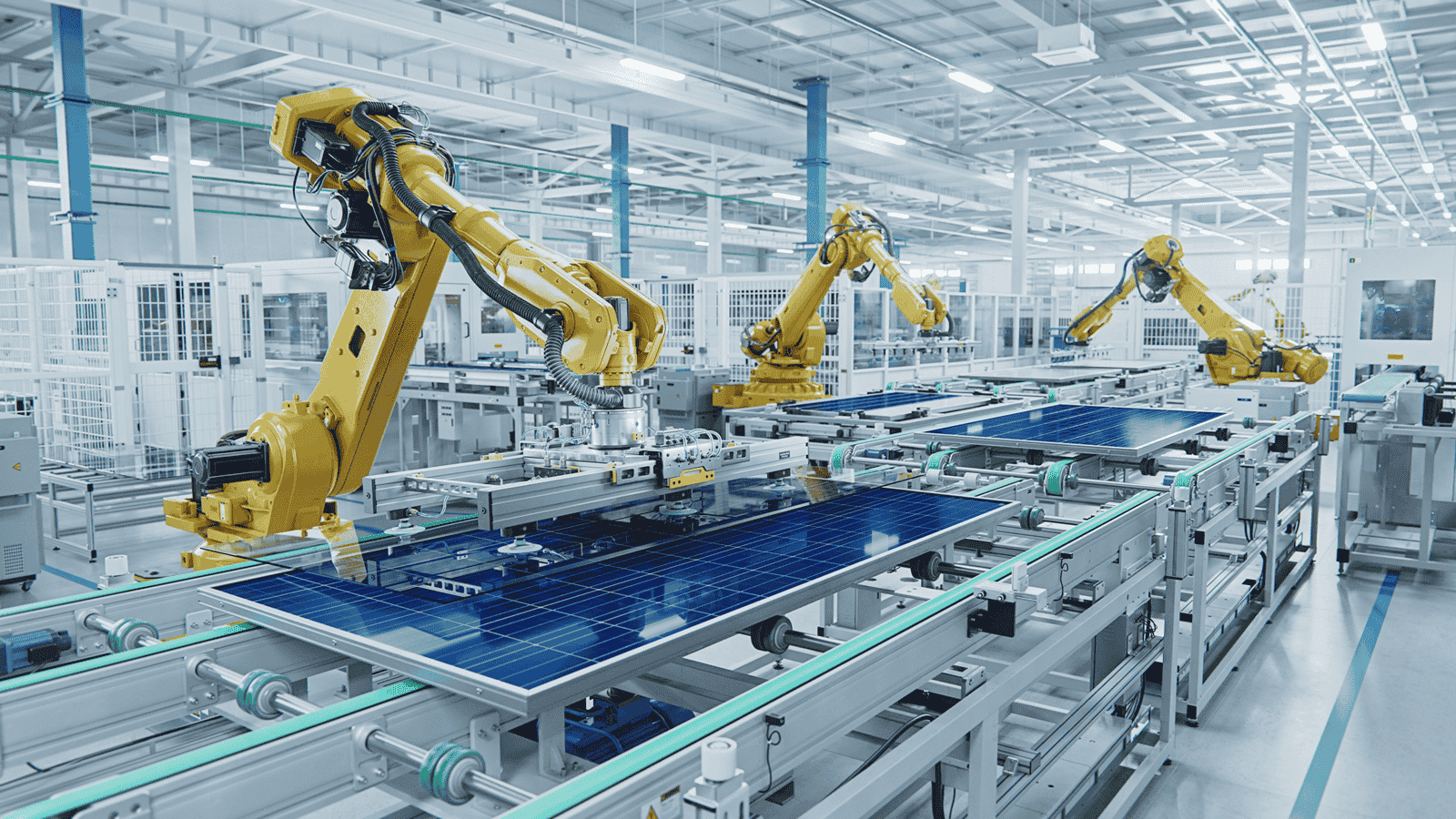

Agentic AI doesn’t need to be in control of the entire system. It can manage the system for a subset of conditions, asking for assistance from a human operator once the system is outside those bounds. Or it may handle a small number of operational requirements. One use case in industrial control is predictive maintenance, where an AI coordinates the delivery of status updates from machine tools and issues work requests without human intervention if it sees opportunities for repair with minimal downtime to the rest of the production line.
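The idea of autonomy within bounds can be expressed as an explicit operating envelope with an escalation path. The sketch below uses fixed rules to keep it short; the vibration thresholds, repair time and machine names are all hypothetical, and in an agentic system a model might plan the work requests while the envelope stays hard-coded.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values depend on the machine and applicable standards.
SERVICE_ABOVE_MM_S = 4.5     # vibration level that warrants planned maintenance
ESCALATE_ABOVE_MM_S = 11.0   # beyond this, the agent hands over to a human
REPAIR_TIME_MIN = 45         # assumed time needed for the repair


@dataclass
class MachineStatus:
    machine_id: str
    vibration_mm_s: float    # RMS vibration velocity reported by the machine tool
    idle_window_min: int     # length of the next scheduled idle slot


def decide(status: MachineStatus) -> str:
    """Act autonomously inside a defined envelope; escalate outside it."""
    if status.vibration_mm_s > ESCALATE_ABOVE_MM_S:
        return "escalate_to_operator"
    if status.vibration_mm_s > SERVICE_ABOVE_MM_S:
        if status.idle_window_min >= REPAIR_TIME_MIN:
            return "issue_work_request"   # repair fits without stopping the line
        return "wait_for_idle_window"
    return "monitor"


for s in [MachineStatus("mill-1", 2.0, 0), MachineStatus("mill-2", 6.0, 60),
          MachineStatus("lathe-1", 6.0, 20), MachineStatus("press-1", 12.5, 90)]:
    print(s.machine_id, decide(s))
```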

Conversely, agentic AI may perform its orchestration at a system-of-systems level. One example is coordinating the activities of multiple robots and drones, as illustrated in Figure 2. The generative AI may use maps and other incoming data to develop an efficient search or coverage pattern for multiple drones. However, the individual robots retain independent control: each can use its own built-in AI models and control systems to plan its route and deal with problems such as turbulence and obstructions. If a robot encounters a problem that needs new guidance, it can send a request to the orchestrating AI so that new instructions can be generated.
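A coverage pattern of the kind an orchestrator might hand out can be produced by a classical planner, which the orchestrating model could call as a tool. The sketch below uses a back-and-forth ("lawnmower") strip pattern and assumes a rectangular, obstacle-free area, identical drones and a fixed sensor swath; it is not the method of any particular system.

```python
import math
from typing import Dict, List, Tuple

Waypoint = Tuple[float, float]  # (x, y) in metres within the search area


def plan_coverage(width_m: float, height_m: float, swath_m: float,
                  n_drones: int) -> Dict[int, List[Waypoint]]:
    """Split a rectangle into parallel strips one sensor swath wide, give each
    drone a contiguous block of strips, and fly them back and forth."""
    n_strips = math.ceil(width_m / swath_m)
    plans: Dict[int, List[Waypoint]] = {d: [] for d in range(n_drones)}
    strips_done = {d: 0 for d in range(n_drones)}
    for s in range(n_strips):
        drone = s * n_drones // n_strips           # contiguous assignment of strips
        x = min((s + 0.5) * swath_m, width_m)      # centre line of the strip
        if strips_done[drone] % 2 == 0:
            plans[drone] += [(x, 0.0), (x, float(height_m))]   # fly "up" the strip
        else:
            plans[drone] += [(x, float(height_m)), (x, 0.0)]   # fly back "down"
        strips_done[drone] += 1
    return plans


plans = plan_coverage(width_m=100, height_m=50, swath_m=10, n_drones=3)
for drone, waypoints in plans.items():
    print(drone, waypoints)
```

Each drone still flies its own waypoints with its onboard control; if it hits an obstruction, the orchestrator could rerun the planner for the remaining strips.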

Such higher-level coordination can also improve the ability of non-robotic systems to react to changing conditions. A smart traffic-control system can analyse local traffic patterns that may be affected by short-term conditions, such as roadworks or vehicle breakdowns, and adapt signal timing to reduce overall congestion. The in-cabin infotainment systems in those vehicles might incorporate their own agents to give the driver information while minimising distraction.


By considering changing circumstances on the road and their effects on safety, the AI may delay announcements or offer advice on route improvements. Similarly, a surveillance camera with agentic capabilities may call on other devices to help determine whether an anomaly needs further attention. The smart camera can send commands to change the intensity and direction of nearby lights or request inputs from other nearby cameras or microphones. The agentic AI then ingests the combined data and may call on an LLM to generate comprehensive, natural-language summaries that it can send to security personnel for review.

These use cases span a range of implementation options. Agentic AI that emphasises multi-system cooperation may run primarily on a cloud or edge server. Device-level uses, however, will often favour local processing to reduce the latency, energy and cost of communicating with the cloud. Local processing also lets the device keep operating when the cloud connection is unavailable, and it helps improve privacy and security because raw data need not leave the device.

Computational efficiency

Generative-AI models are usually much larger than the convolutional neural networks (CNNs) used for image recognition and the recurrent neural networks (RNNs) that interpret streams of one-dimensional data like audio. The size and the number of operations needed to generate each successive token impose high demands on the computational capabilities of any platform, whether it is running in the cloud or at the edge.

CoT-like methods significantly increase the number of tokens to be generated compared with using an LLM simply to recognise or generate natural-language text or speech, because every intermediate reasoning step is itself made of generated tokens. The overhead climbs further if the model runs multiple CoT loops and compares their answers to pick what it estimates to be the best response.
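A back-of-the-envelope calculation shows how quickly the overhead compounds. All the numbers below are illustrative assumptions (a 20-token answer, 300 tokens of reasoning per path, five compared paths and an edge decode rate of 20 tokens/s), not measurements of any particular model or device.

```python
def generated_tokens(answer_tokens: int, reasoning_tokens: int = 0,
                     paths: int = 1) -> int:
    """Tokens the model must generate: each path produces its reasoning
    steps plus a final answer."""
    return (reasoning_tokens + answer_tokens) * paths


def generation_time_s(tokens: int, tokens_per_s: float) -> float:
    """Sequential decode time at a fixed generation rate."""
    return tokens / tokens_per_s


# Illustrative numbers only.
direct = generated_tokens(20)
cot_voted = generated_tokens(20, reasoning_tokens=300, paths=5)
print(direct, cot_voted, cot_voted / direct)
print(generation_time_s(direct, 20.0), generation_time_s(cot_voted, 20.0))
```

Under these assumptions the work grows 80-fold. Running the paths as a batch can shorten wall-clock time, but the total computation, and hence the energy, stays roughly the same.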

Figure 1: The orchestration that agentic AI performs may be at a system-of-systems level. One example is in the coordination of multiple robots and drones’ activities.

For agentic AI to run at the edge or on embedded devices, models need to be optimised for higher throughput at lower computational cost and memory overhead than their cloud-based counterparts. The number of weights that a model must process on each forward pass determines both the memory capacity needed to store them and the number of calculations required to generate each token.
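The link between weight count, memory and compute can be made concrete with two rules of thumb: storage is parameters times bits per weight, and a dense Transformer needs roughly two operations (one multiply, one add) per weight for each generated token. The sketch below applies them to a 1.1-billion-parameter model, the size class of TinyLlama; the FLOP figure is an approximation, and the memory figure covers weights only.

```python
def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Storage for the weights alone (excludes activations and the KV cache)."""
    return n_params * bits_per_weight / 8 / 1e9


def approx_flops_per_token(n_params: float) -> float:
    """Rule of thumb for a dense Transformer forward pass: about one multiply
    and one add per weight, i.e. roughly 2 x parameters."""
    return 2 * n_params


N = 1.1e9  # parameter count of a ~1B-class model such as TinyLlama
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(N, bits):.2f} GB")
print(f"~{approx_flops_per_token(N) / 1e9:.1f} GFLOPs per generated token")
```

Long CoT runs add a further cost not captured here: the key-value cache grows with the length of the context the model has generated so far.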

There are several strategies that enable lower memory usage and processing per token. For repetitive tasks, recent research suggests that smaller LLMs can deliver high accuracy and are easier to tune for specific situations than larger models, which are better at handling a wide range of conditions, many of which may not apply to the target use case[4].

Other techniques change the training regime to deliver higher-quality responses for a given model size. Some developers have used these techniques to create generative-AI models suited to edge and embedded computing. Some of these size-optimised models build on larger LLMs: TinyLlama, for example, adopts the architecture and tokenizer of Meta’s Llama 2. Others have been custom-built. The Smol family, created by Hugging Face and, like TinyLlama, available as open source, was designed specifically as a set of compact LLMs. These models deliver accuracy comparable to conventionally trained models several times their size.

Further optimisations for edge computing include weight quantisation, where the model parameters are encoded as low-bit integers (8-bit or smaller) instead of the 32-bit floating-point values commonly used during training. This both reduces memory footprint and provides opportunities to use lower-cost processor and accelerator hardware.
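The simplest form of weight quantisation uses one scale factor per tensor so that the largest weight magnitude maps to 127. The sketch below shows this symmetric per-tensor INT8 scheme on a handful of made-up weights; production toolchains typically go further, for example with per-channel scales and calibration data.

```python
from typing import List, Tuple


def quantise_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantisation: one scale maps the largest
    magnitude to 127 and every weight to the nearest integer step."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantise(q: List[int], scale: float) -> List[float]:
    """Recover approximate floating-point weights from the integers."""
    return [v * scale for v in q]


w = [0.5, -1.27, 0.0, 1.27, 0.0314]   # illustrative weights
q, scale = quantise_int8(w)
restored = dequantise(q, scale)
print(q, scale)
print(max(abs(a - b) for a, b in zip(w, restored)))  # bounded by scale / 2
```

Each weight now occupies one byte instead of four, and the rounding error per weight is at most half a quantisation step.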

With sufficiently fast models, local processing allows decisions to be made in real time, with more complex problems perhaps devolved to the cloud. One way to manage this separation of functions is to run a simple agentic AI locally that defers to instructions relayed from a cloud-based model. Another approach is speculative decoding. Here, a small model running locally generates draft tokens, which a more capable model running on a more powerful edge server or in the cloud then checks, accepting those it agrees with and correcting the first one it rejects.
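The draft-and-verify mechanism can be sketched with two toy "models". This is the greedy variant: the published method uses a probabilistic accept/reject rule so that sampled output follows the larger model's distribution, whereas here both models simply return their single most likely next token. The reference sentence and both model functions are hypothetical stand-ins.

```python
from typing import Callable, List, Tuple

Model = Callable[[List[str]], str]  # greedy next-token predictor


def greedy_decode(model: Model, prompt: List[str], max_new: int) -> List[str]:
    """Baseline: one call to the model per generated token."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(model(out))
    return out


def speculative_decode(target: Model, draft: Model, prompt: List[str],
                       max_new: int, k: int = 4) -> Tuple[List[str], int]:
    """Greedy speculative decoding. Returns the tokens and the number of
    verification rounds (each round = one batched pass of the target model)."""
    out, rounds = list(prompt), 0
    while len(out) - len(prompt) < max_new:
        rounds += 1
        # 1. The cheap draft model proposes k tokens one after another.
        proposal: List[str] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. The target model checks every proposed position. Shown as a loop,
        #    but in practice all k positions are scored in a single forward pass.
        for i, tok in enumerate(proposal):
            expected = target(out + proposal[:i])
            if expected != tok:
                out += proposal[:i] + [expected]  # keep agreed prefix, fix first miss
                break
        else:
            out += proposal + [target(out + proposal)]  # all accepted, plus a bonus token
    return out[: len(prompt) + max_new], rounds


# Hypothetical toy models: the target "knows" one sentence; the draft gets one word wrong.
REFERENCE = "the robot moves to the charging dock and waits".split()


def target_model(ctx: List[str]) -> str:
    i = len(ctx)
    return REFERENCE[i] if i < len(REFERENCE) else "<eos>"


def draft_model(ctx: List[str]) -> str:
    guess = target_model(ctx)
    return "station" if guess == "dock" else guess


tokens, rounds = speculative_decode(target_model, draft_model, ["the"], max_new=8)
print(" ".join(tokens), rounds)
```

The output matches plain greedy decoding with the target model, but needs only three verification rounds instead of eight sequential calls. When the verifying model sits on a remote server, fewer rounds also means fewer network round trips.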

AMD, NXP, Qualcomm, STMicroelectronics, Renesas and others have developed hardware with acceleration features that suit generative AI models at various levels of capability. Many of these platforms also support software such as ROS 2, which enables real-time control in robotics and other cyber-physical systems. The hardware platforms also support features such as secure boot and memory encryption to help prevent attackers from corrupting the models or stealing important data.

Conclusion

By building on generative AI and other technologies, agentic AI represents a significant shift in the ability of many systems to act autonomously. Advanced hardware coupled with software optimised for model throughput will continue to deliver increasingly powerful systems and, with them, greater efficiencies. It will be important to recognise the limitations of generative AI in the feedback loops of agentic AI and to use additional tools to check and confirm the models’ outputs. Careful development will help ensure that this revolution in AI and autonomous systems is deployed beneficially.

Avnet Silica is uniquely equipped to help developers harness the power of agentic AI and generative AI. By providing advanced hardware, model optimisation techniques, ecosystem integration, and expert support, Avnet Silica enables the development of autonomous systems that are efficient, secure, and scalable.

References

[1] https://arxiv.org/abs/2201.11903

[2] https://arxiv.org/abs/2501.19393; https://arxiv.org/abs/2502.12215

[3] https://www.ibm.com/think/topics/ai-agent-orchestration; https://formant.ai/blog/the-transformative-future-of-ai-in-robotics
